Background

For decades now, we’ve been hearing that artificial intelligence (AI) will dominate our future. And while we may be numb to some of these predictions in movies, games and pop culture, AI has been creeping into our lives in recent years, whether we know it or not. Machine learning has been especially effective at augmenting everyday technology, with things like Siri, Google Photos (image recognition), self-driving vehicles and more.

But even with these advancements, it has been easy to dismiss the potential of AI as it improves in future years. Sure, it can help us with specific tasks, but will it ever become close to human intelligence? Sure, it can handle and augment automated tasks, but creative tasks will always be dominated by humans, right?

Right?

In recent months, even the creative side of the AI argument has taken a huge blow as OpenAI’s Dall-E 2 has been slowly rolled out to the public and companies like Google and Facebook have announced similar developments in AI research. Often called ‘prompt to image’ or ‘text to image’, this technology flips the script on the image-to-text capabilities that have become available in recent years, where Facebook can easily identify your face in any image and Google can identify nearly any object in any image and make it searchable using image recognition.

I’ll leave the detailed description of how prompt to image technology works to others (who understand it better than I) but, put simply, training these systems on billions of images with text descriptions has made it possible to generate mind-blowing, photorealistic images in seconds.

I’ve been watching this from afar over the past few months, considering what effect this and similar technologies will have on our specific corner of the world (3D visualization in civil and transportation). So when I finally received access to Dall-E 2 (the other platforms haven’t allowed public access yet) this past week, I decided to put it through its paces to see how close AI is to augmenting or taking over visualization in our industry.

Predictions

I’ve seen some early comparisons of Dall-E 2 to human work with varying results. I’ve also seen many comments from artists arguing that this technology is far away from the true human touch and will only serve as another tool to augment true human artists.

All that said, I want to be clear that I believe this technology will absolutely disrupt the world. It will put millions out of jobs and spread well past images and into movies, music, video games and other creative work. Sure, an artist can have control over a creation that AI may never fully have, but if we can generate dozens of images of whatever we can imagine in minutes in the year 2022, what chance will artists have in the year 2030? My children will likely grow up in a world where they will speak an idea for a movie and instantly be able to watch it, with quality surpassing our current blockbusters.

So yeah, I’m bullish on this technology and have been curious how it may augment or even disrupt my own business and industry.

I was confident that nothing currently available would replace our products now or any time soon, but my bullish perspective prepared me to see some surprising results.

That said, SPOILER ALERT, I was barely able to produce anything that wasn’t pure visual garbage.

Transportation Visualization Results

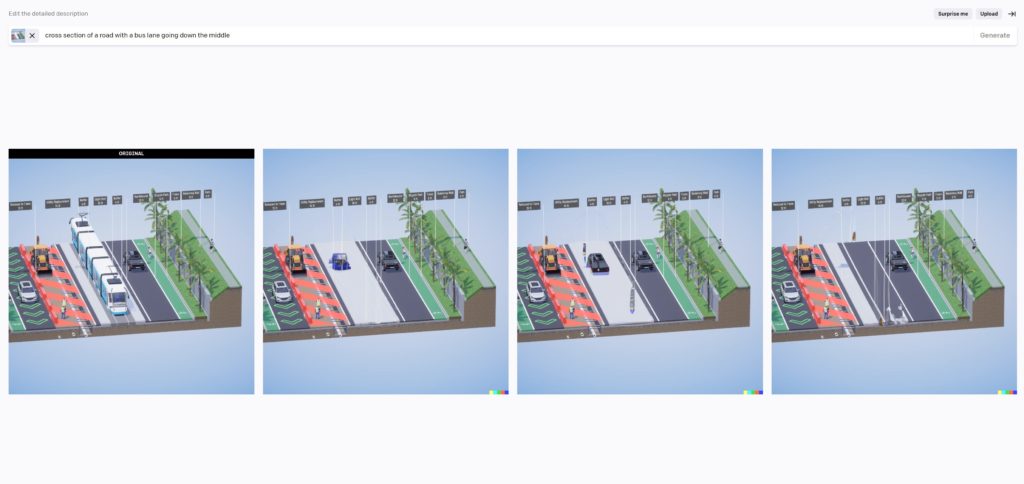

Dall-E 2 can create images in seconds from any text prompt, which was my first test. It also allows you to edit or recreate previously rendered images, which I tested later.

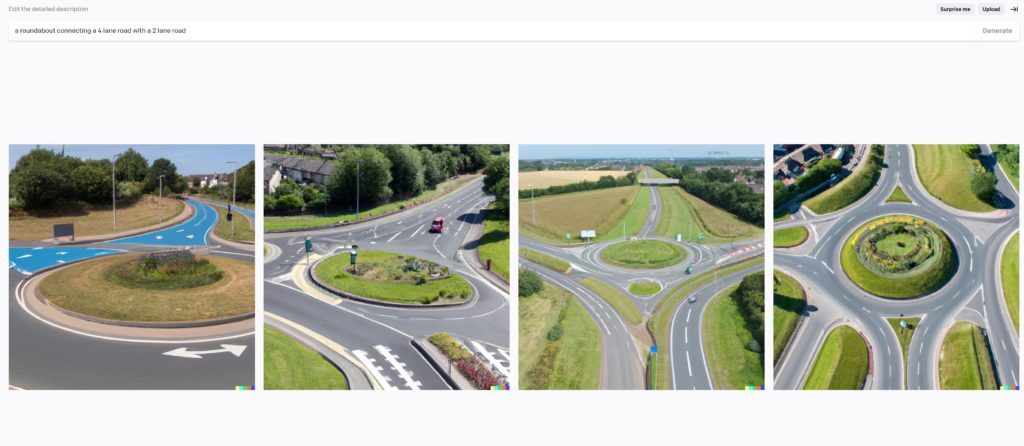

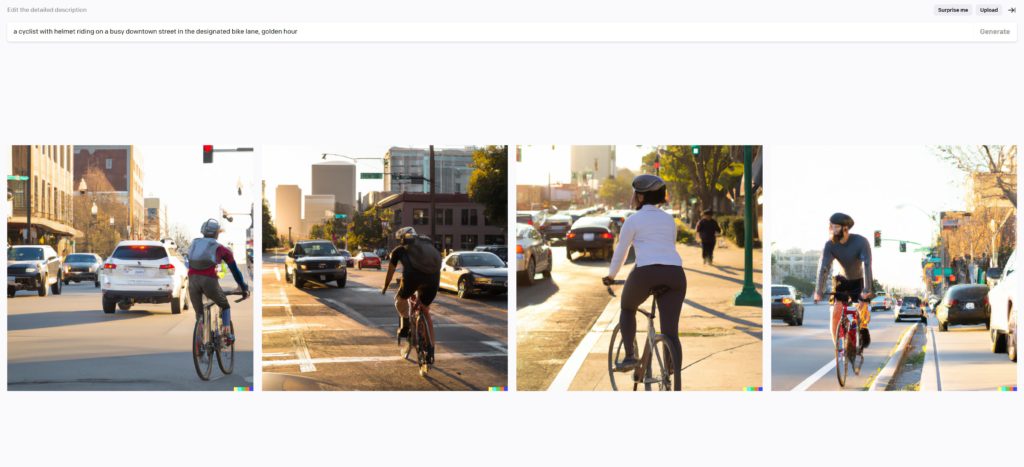

First, a simple test:

From a distance, these look believable. Upon closer inspection, however, these images and the associated designs really fall apart. The striping is wrong. The roads would never work. The images, and the roads, just make no sense.

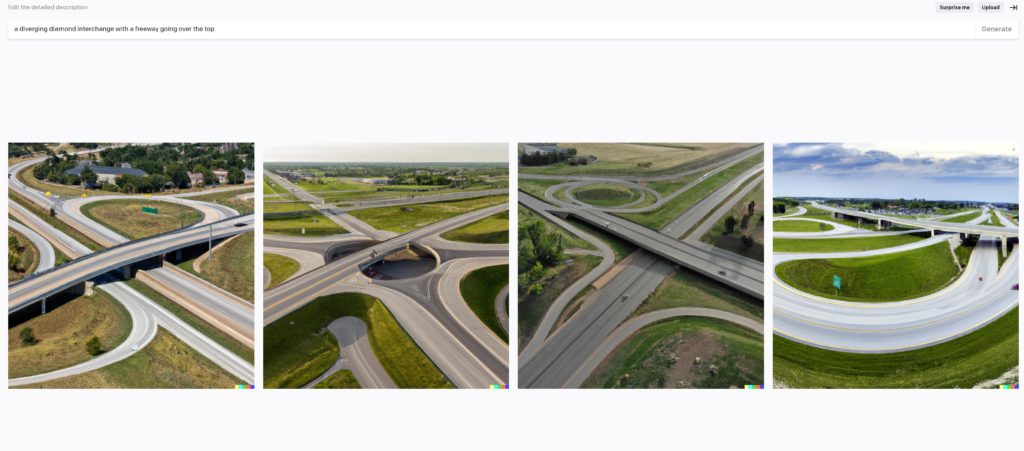

I did a few similar tests with similar results. Dall-E 2 didn’t know what a diverging diamond interchange was, nor how to represent it.

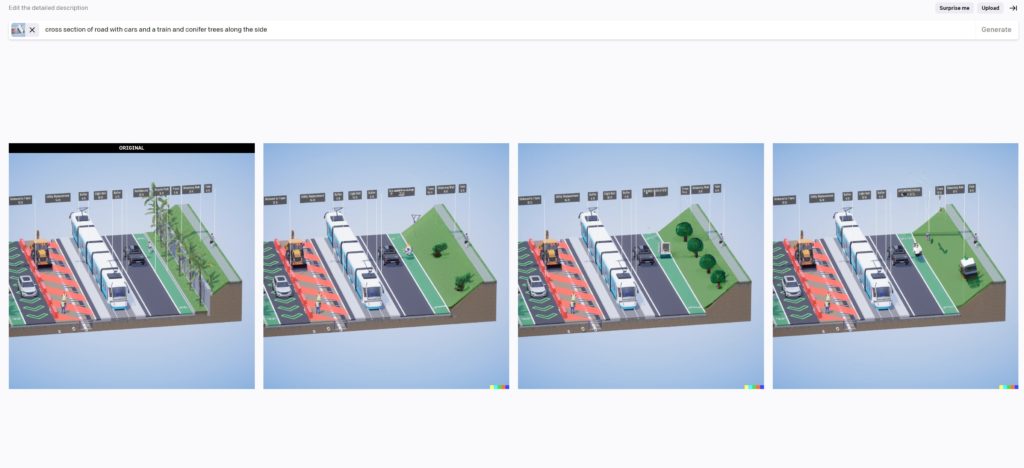

Beyond Typicals Style Results

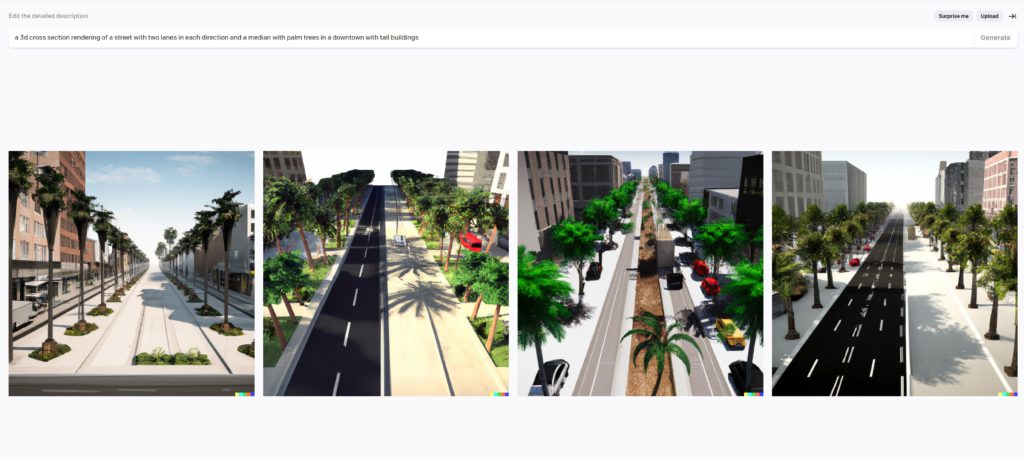

While Beyond CAD is a more general civil visualization tool capable of presenting nearly any type of project, Beyond Typicals is a much more specific type of visualization and aesthetic, so I thought the results might be better. However, the results were similar.

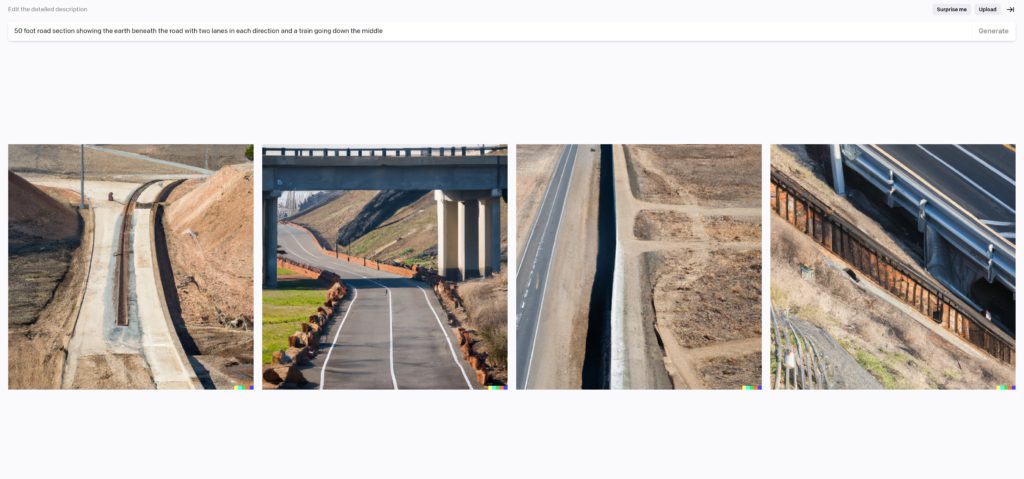

Non-Specific Results

I was determined to show that Dall-E 2 could produce usable results in some manner, so I tried a different tactic. If the detail of transportation projects was too complex to handle, what if I did something more narrative that I might be able to use as part of a proposal? This was where Dall-E 2 came closest to producing something usable, but most of these results showed the same visual confusion.

While the three pictures on the right are not just wrong but dangerous (driving head-on with a truck?!), the picture on the left is almost usable from a distance. Never mind the running of a red light, the floating streetlights or the garbled detail of the bike and rider; it does seem almost passable, and with enough prompts and testing, images like these might take the place of stock images.

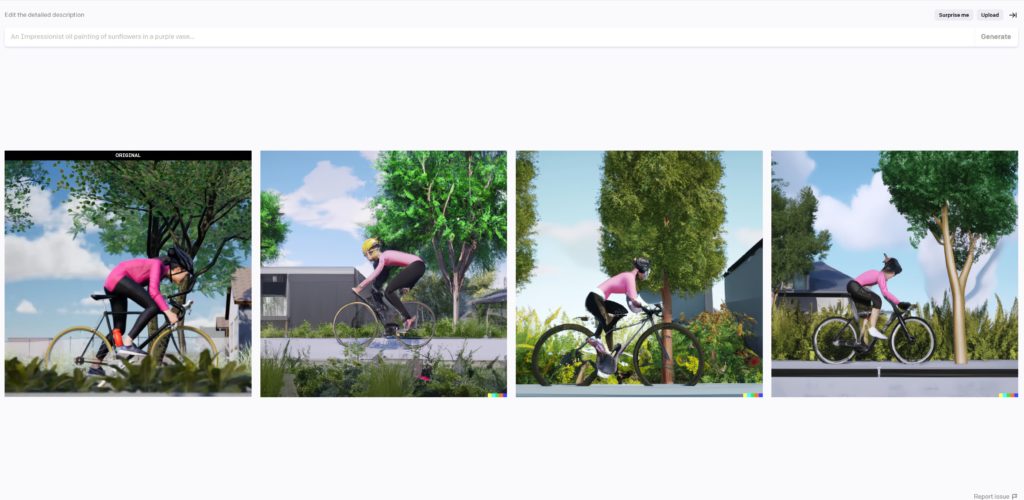

Image Editing

Alright, so Dall-E 2 clearly struggles with the specifics and details of transportation, but what if we used it to edit previously rendered images? I took a variety of Beyond CAD and Beyond Typicals renders and used both the ‘create similar images’ feature and the ‘inpainting’ feature to see how it would fare. Again, no matter what I threw at it, the results were consistently disappointing. Oh, and I should probably point out that Dall-E 2 rarely handles text well, which is a problem for any sort of sign (Google Imagen has shown better results with text).

Inpainting

Inpainting allows the Dall-E 2 user to replace a specific part of an image with AI-generated content. In these tests, it seemed confused about both what the original image was and what I was trying to replace.

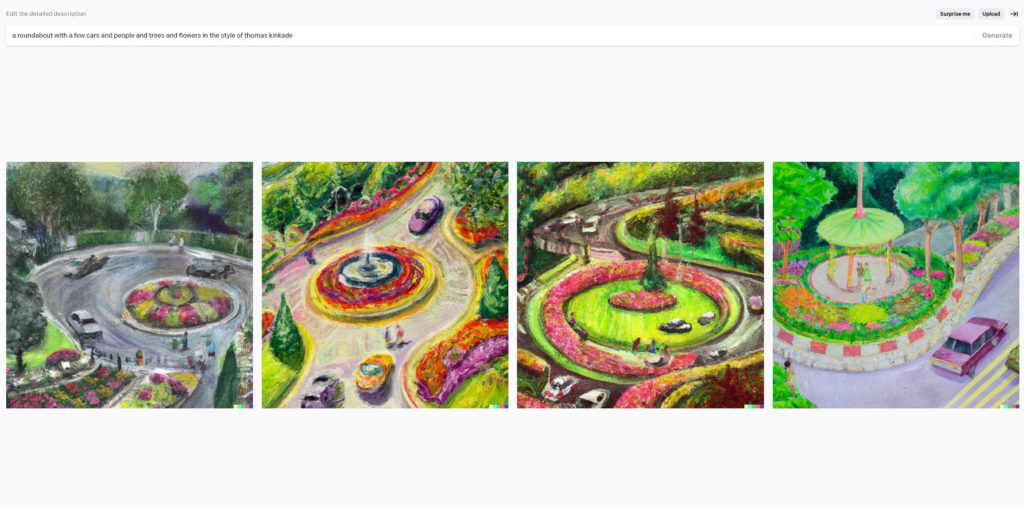

Non-Transportation Results

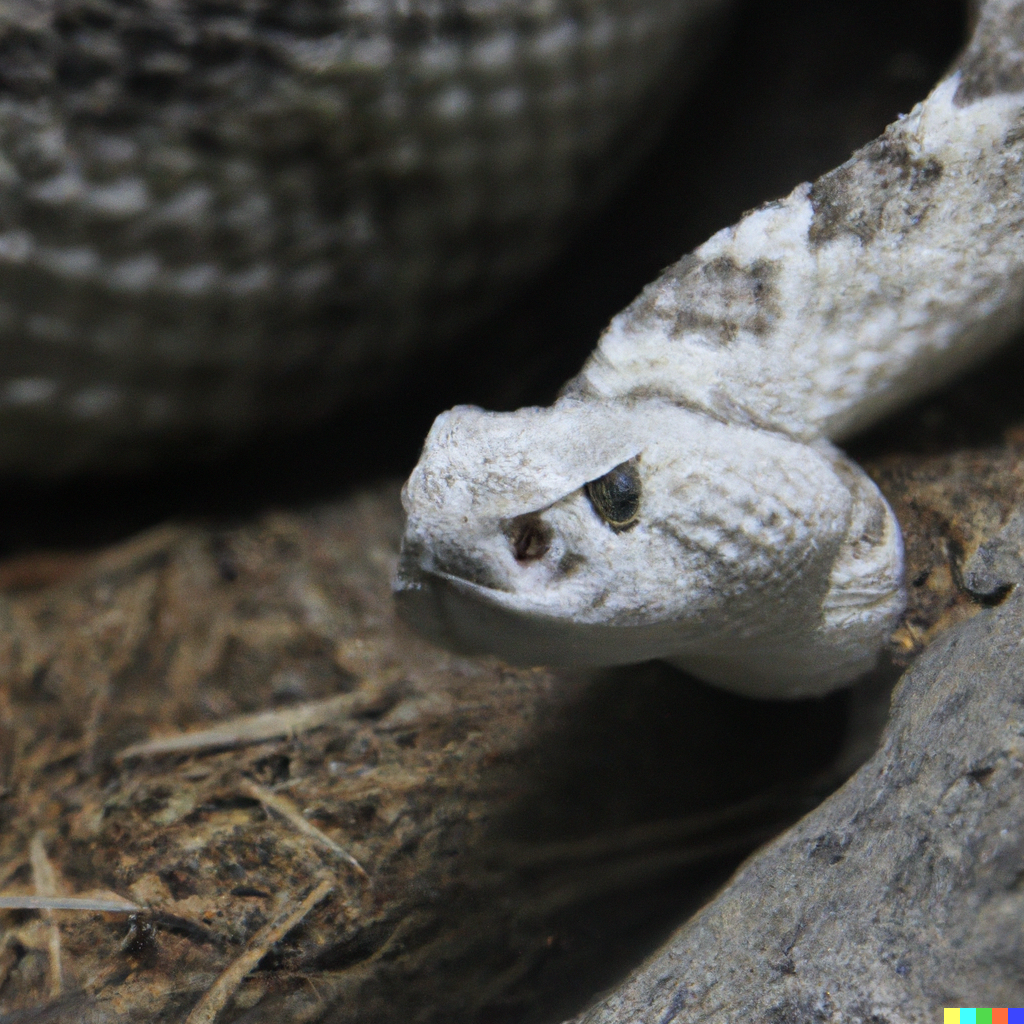

I want to be clear that using Dall-E 2 outside these transportation-related tests has been mostly a delight. It can absolutely produce spectacular images in seconds. Below are some of my non-transportation-related favorites (with some influence from my kids).

Conclusion

Dall-E 2 seems to be at the forefront of a revolutionary and disruptive technology. It will quickly shake up industries like stock photos, product design, thumbnail generation, art production and more.

But what about 3D visualization in civil and transportation? Well, I initially thought this industry would start to see this technology used within the next few years, but after testing it myself, it seems further off. The unique detail and specific variables associated with these complex projects are just too much for AI to handle now and in the near future. Design elements like striping, lane design, landscape, aesthetics and more just confuse the system. Dall-E 2 and similar technologies can handle complex elements like lighting, refraction, shadows, hair and more, but they have been trained on those subjects over billions of pictures with consistent results, while roads and highways are neither as common nor as consistent. Something like a 3D typical section is difficult for Dall-E 2 to handle because there are probably so few of them in the training photos, and even those probably aren’t all labeled consistently.

Oh, and this is just as it relates to image renders. Looking for a full 3D flythrough of your project? That is much further out than the simple image renders, not to mention real-time or virtual reality 3D visualization.

I would imagine that inpainting (and similar technologies) will be the first uses of this technology in conjunction with 3D CAD design and visualization. And as for Beyond CAD, Beyond Typicals and any future products of ours? We will definitely keep an eye out for ways to integrate these tools with our own products for the best and most efficient results.

AI is changing the world, and these technologies prove that it may happen faster than we previously imagined. All of that said, however, a very specific niche like the one served by Beyond CAD and Beyond Typicals will still need talented engineers, CAD designers, artists and software for the foreseeable future.